Abstract:

The final aim of this project is to harden malware detection machine learning (ML) models using deep reinforcement learning (DRL). There are two stages toward this goal:

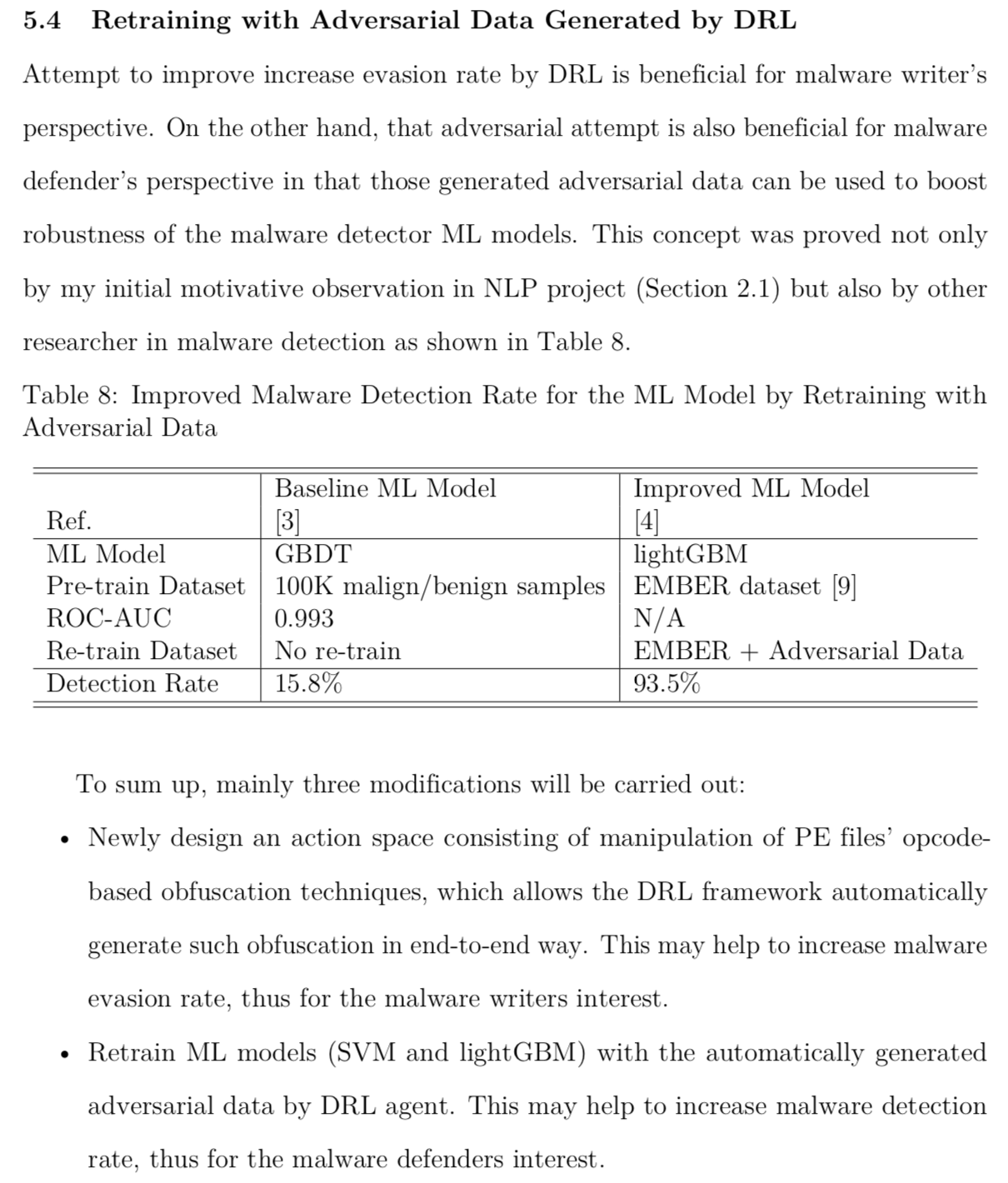

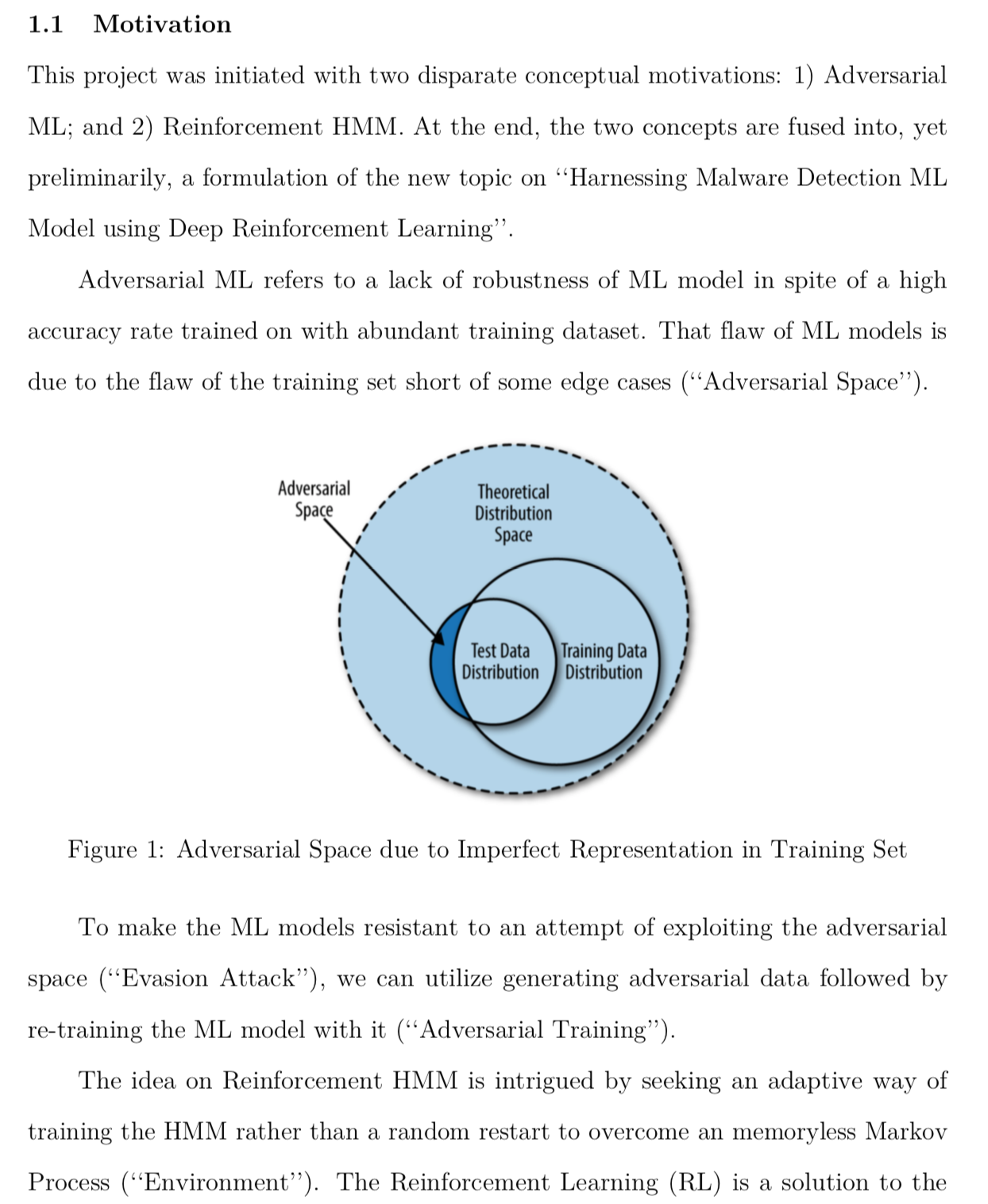

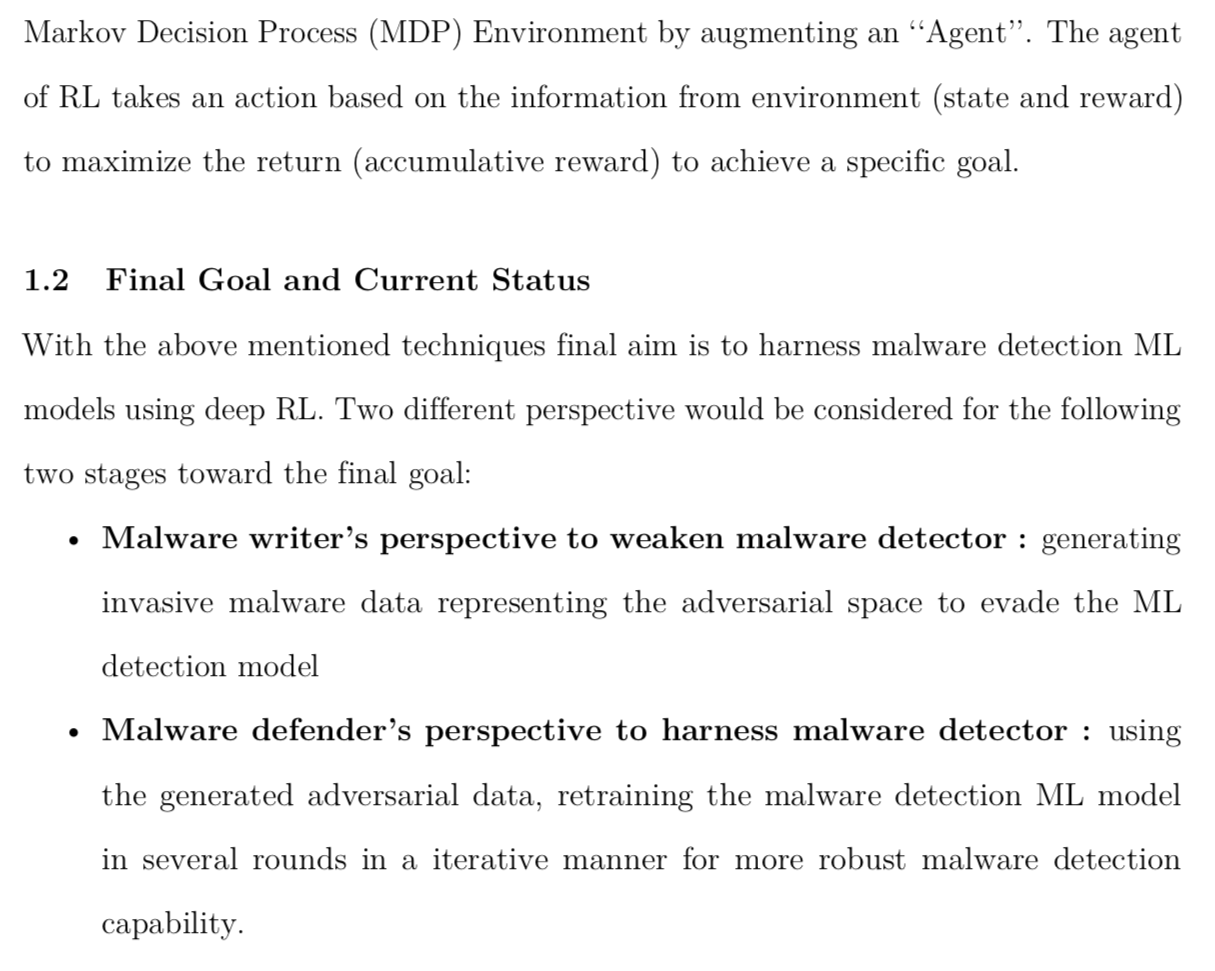

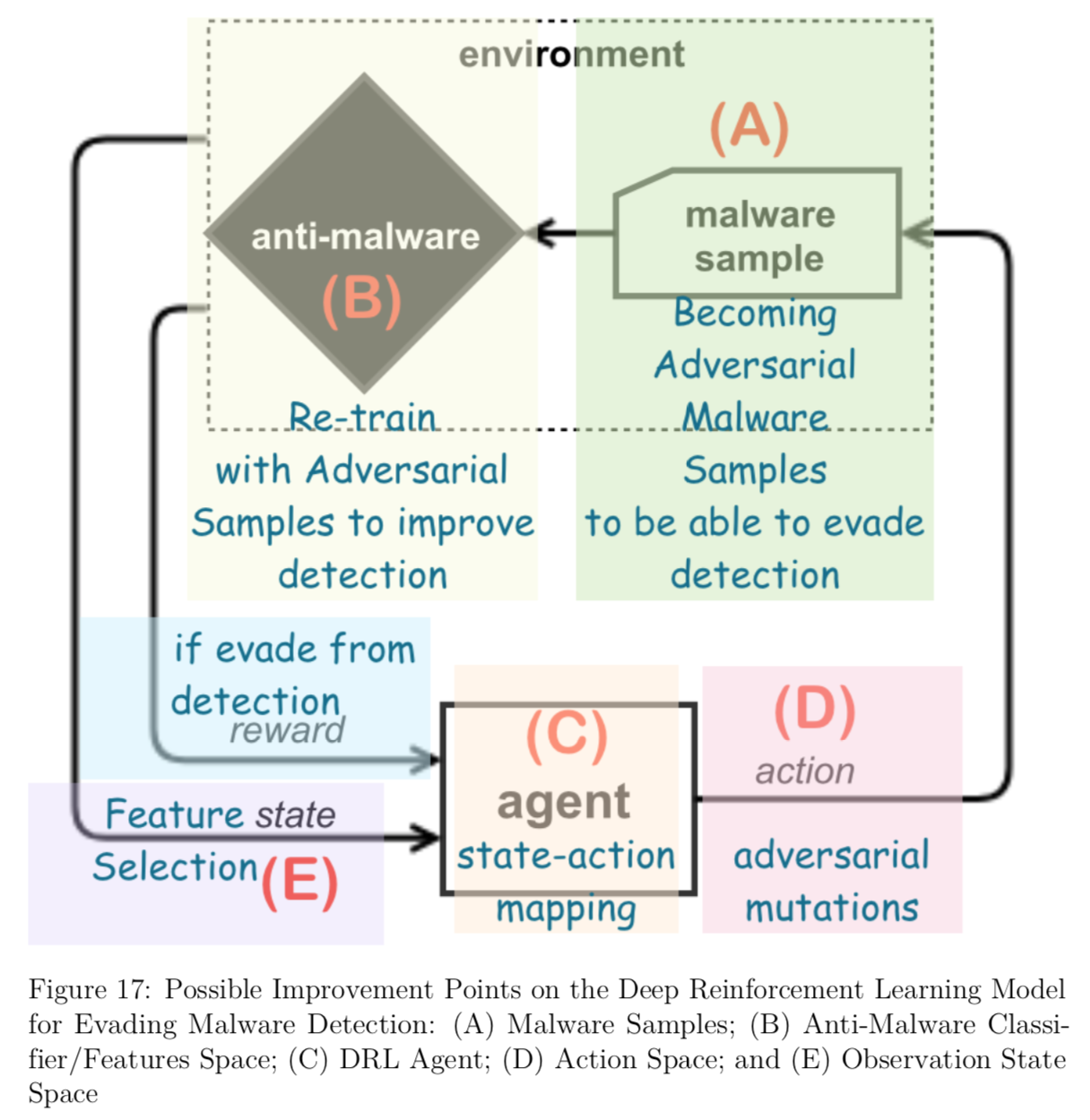

- Malware writer's perspective, to weaken the malware detector: generating evasive malware samples that represent the adversarial space and evade the ML detection model.

- Malware defender's perspective, to harden the malware detector: using the generated adversarial data to retrain the malware detection ML model iteratively over several rounds, for more robust malware detection.

This project is currently in the first stage. The DRL framework lets the agent learn the value function end to end (input → black box → output):

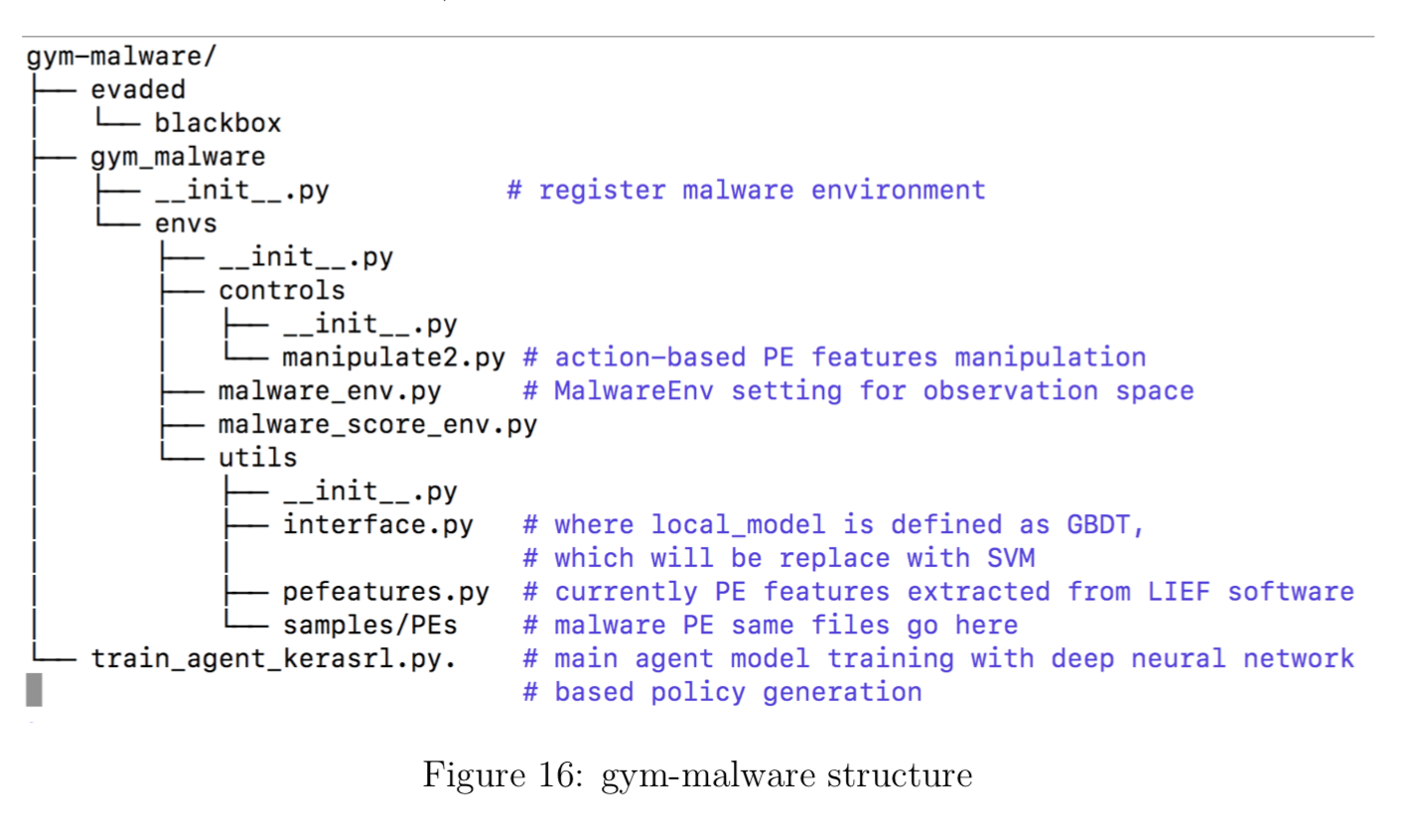

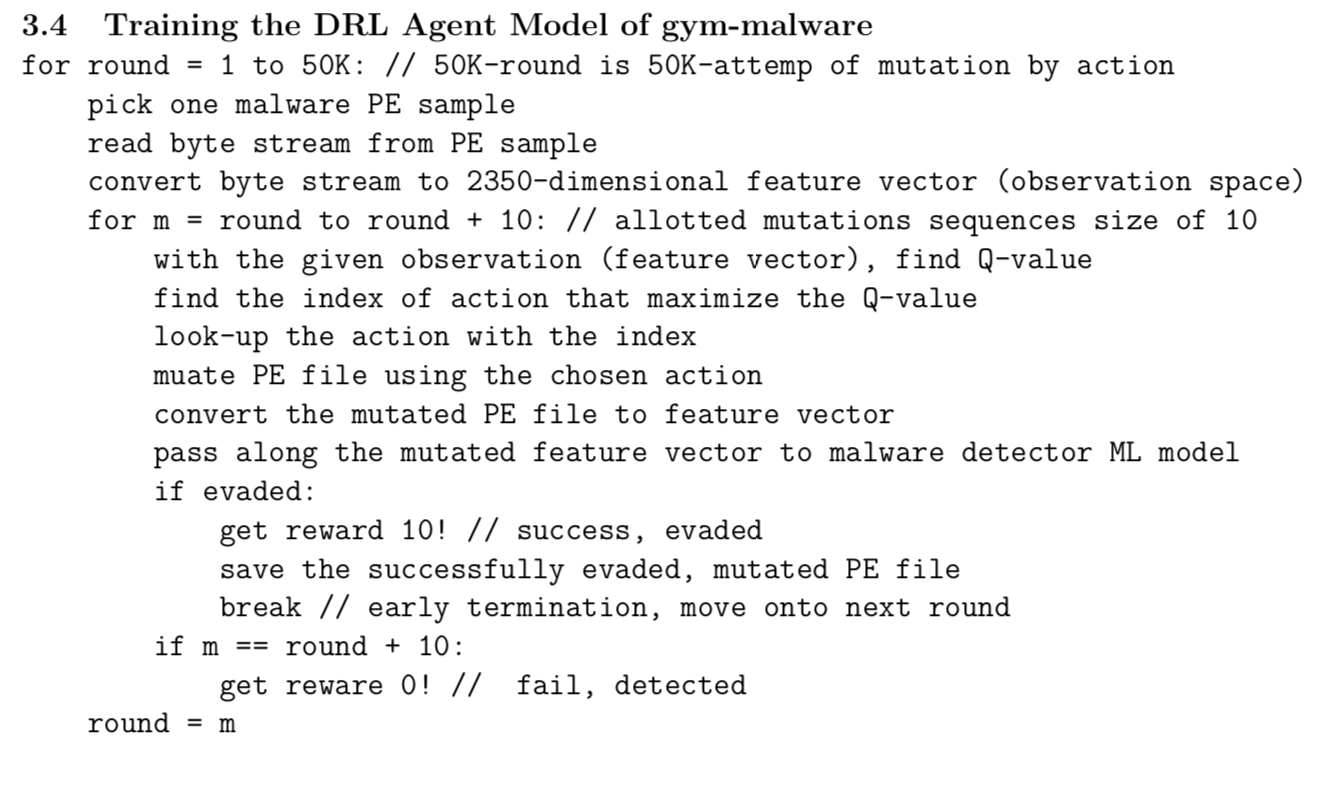

The input to the DRL environment is malware portable executable (PE) files. The outputs of the DRL environment are the estimated reward (higher for successful evasion) and the state-action policy (a sequence of state-action mappings, where a state is a PE feature vector and an action is a mutation applied to the PE file).
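The state, action, and reward roles described above can be illustrated with a toy environment. This is only a sketch under loud assumptions: `toy_features`, `toy_detector`, `ToyEvasionEnv`, the padding-based action set, the reward of 10.0, and the 0.5 threshold are all hypothetical stand-ins, not the project's actual feature extractor, detector, or mutation set.

```python
# Hypothetical action set: each action appends a fixed amount of zero padding.
# The real PE mutations are not specified in the text, so these are placeholders.
ACTIONS = {"pad_small": 256, "pad_medium": 512, "pad_large": 1024}
ACTION_NAMES = list(ACTIONS)


def toy_features(pe_bytes):
    """Stand-in feature vector: [size in KiB, mean byte value scaled to 0..1]."""
    n = len(pe_bytes)
    mean = sum(pe_bytes) / n if n else 0.0
    return [n / 1024.0, mean / 255.0]


def toy_detector(features):
    """Stand-in black-box detector: returns a maliciousness score in [0, 1]."""
    return max(0.0, 1.0 - 0.25 * features[0])


class ToyEvasionEnv:
    """Minimal episode loop: state = feature vector, action = mutation."""

    def __init__(self, pe_bytes, threshold=0.5, max_steps=10):
        self.original = bytes(pe_bytes)
        self.threshold = threshold  # scores below this count as "benign"
        self.max_steps = max_steps
        self.reset()

    def reset(self):
        """Start a new episode from the unmodified sample."""
        self.current = self.original
        self.steps = 0
        return toy_features(self.current)

    def step(self, action_index):
        """Apply one mutation and return (state, reward, done)."""
        padding = ACTIONS[ACTION_NAMES[action_index]]
        self.current = self.current + bytes(padding)
        self.steps += 1
        state = toy_features(self.current)
        evaded = toy_detector(state) < self.threshold
        reward = 10.0 if evaded else 0.0  # reward only on successful evasion
        done = evaded or self.steps >= self.max_steps
        return state, reward, done
```

An agent would call `reset()` once per sample and then `step()` repeatedly until `done` is true; the detector is only ever queried for a score, which matches the black-box setting described above.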

The objective for the DRL agent is to learn a value function (i.e., the cumulative discounted reward) that lets it efficiently select actions that mutate the PE file so that it is misclassified as benign while its malicious functionality is preserved.
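As a small illustration of what a cumulative discounted reward means, the sketch below computes the return of one episode and picks the action with the highest estimated value. The function names, the discount factor `gamma`, and the example action names are assumptions made for illustration; the text does not state which DRL algorithm or hyperparameters the project uses.

```python
def discounted_return(rewards, gamma=0.99):
    """Sum of rewards, each discounted by gamma per step into the future.

    Computes r_0 + gamma * r_1 + gamma**2 * r_2 + ... by folding from the
    last reward backwards.
    """
    total = 0.0
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


def greedy_action(action_values):
    """Return the action whose estimated value is highest.

    `action_values` maps an action name to its estimated value for the
    current state (hypothetical names, for illustration only).
    """
    return max(action_values, key=action_values.get)


# An episode that evades the detector on the third mutation.
episode_rewards = [0.0, 0.0, 10.0]
print(discounted_return(episode_rewards, gamma=0.5))  # 2.5
print(greedy_action({"pad_small": 1.0, "pad_large": 2.0}))  # pad_large
```

Because later rewards are scaled down by `gamma`, an agent maximizing this quantity prefers mutation sequences that reach evasion in fewer steps.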

Motivation/Introduction

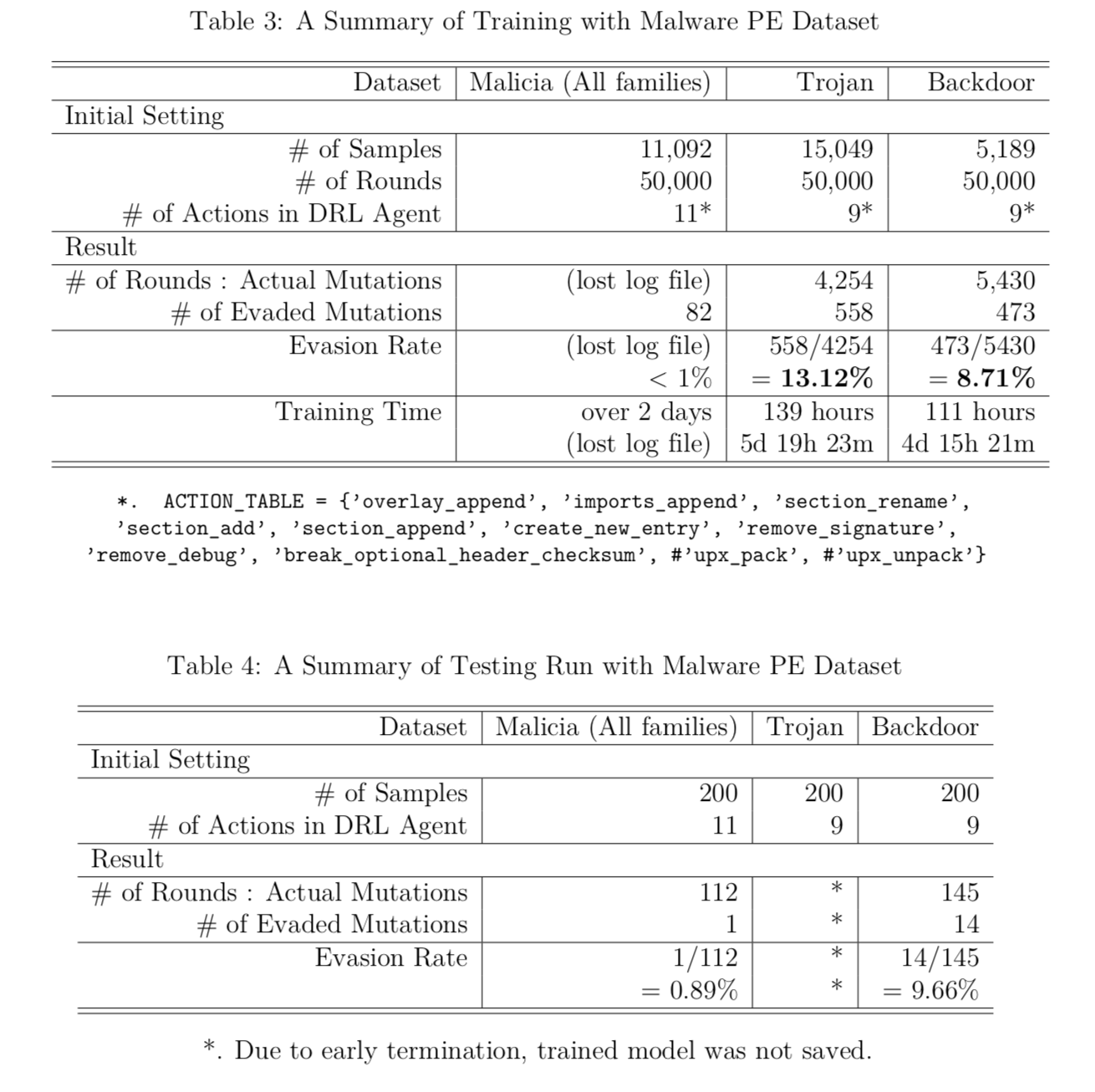

Experiment

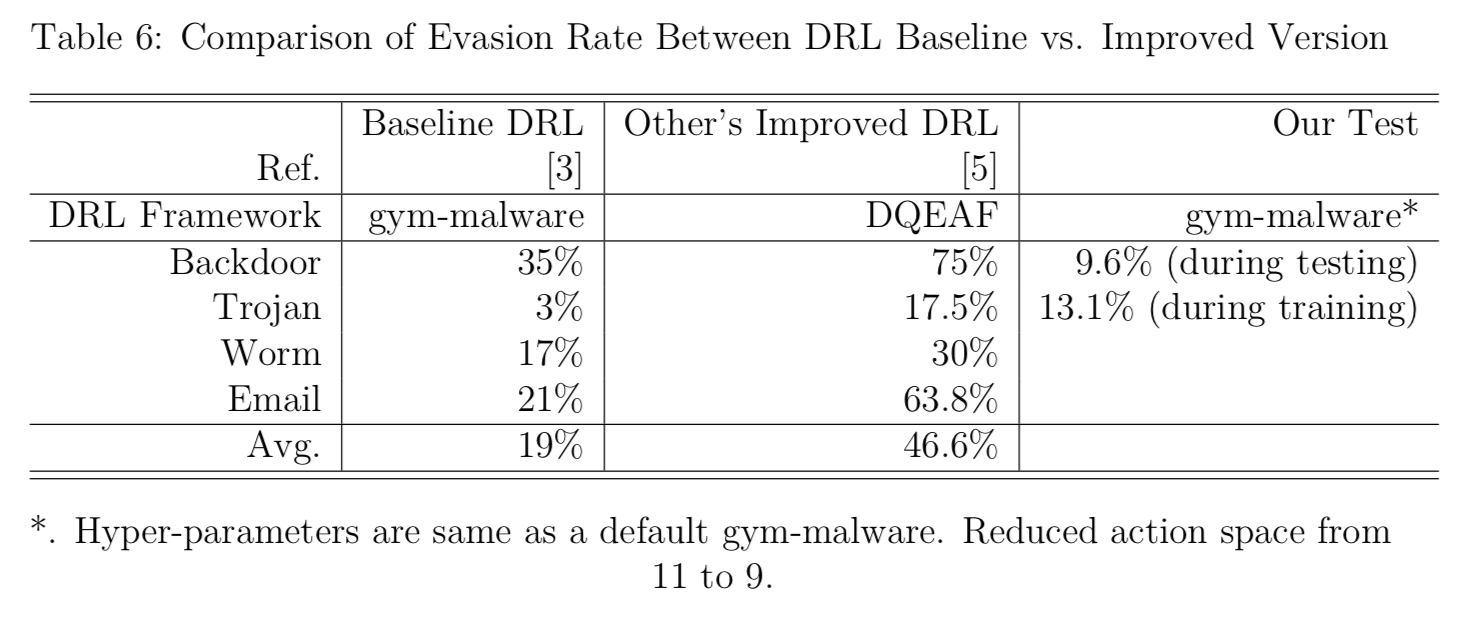

Results

Future Work